
High Performance Computing (HPC) clusters are an essential tool because they are an excellent platform for solving a wide range of problems through parallel and distributed applications. Nonetheless, HPC clusters consume large amounts of energy, which, combined with notably increasing electricity prices, has an important economic impact and forces owners to reduce operating costs.

To reduce the high energy consumption of HPC clusters, we have developed EECluster, a software tool for managing the energy-efficient allocation of cluster resources. EECluster uses a Hybrid Genetic Fuzzy System (HGFS) as its decision-making mechanism. The HGFS elicits part of its rule base depending on the cluster workload scenario, delivering good compliance with the administrator's preferences. The latest version uses a more sophisticated and exhaustive model that covers a wider range of environmental aspects and balances service quality and power consumption against all indirect costs, including hardware failures and subsequent replacements, measured in both monetary units and carbon emissions.

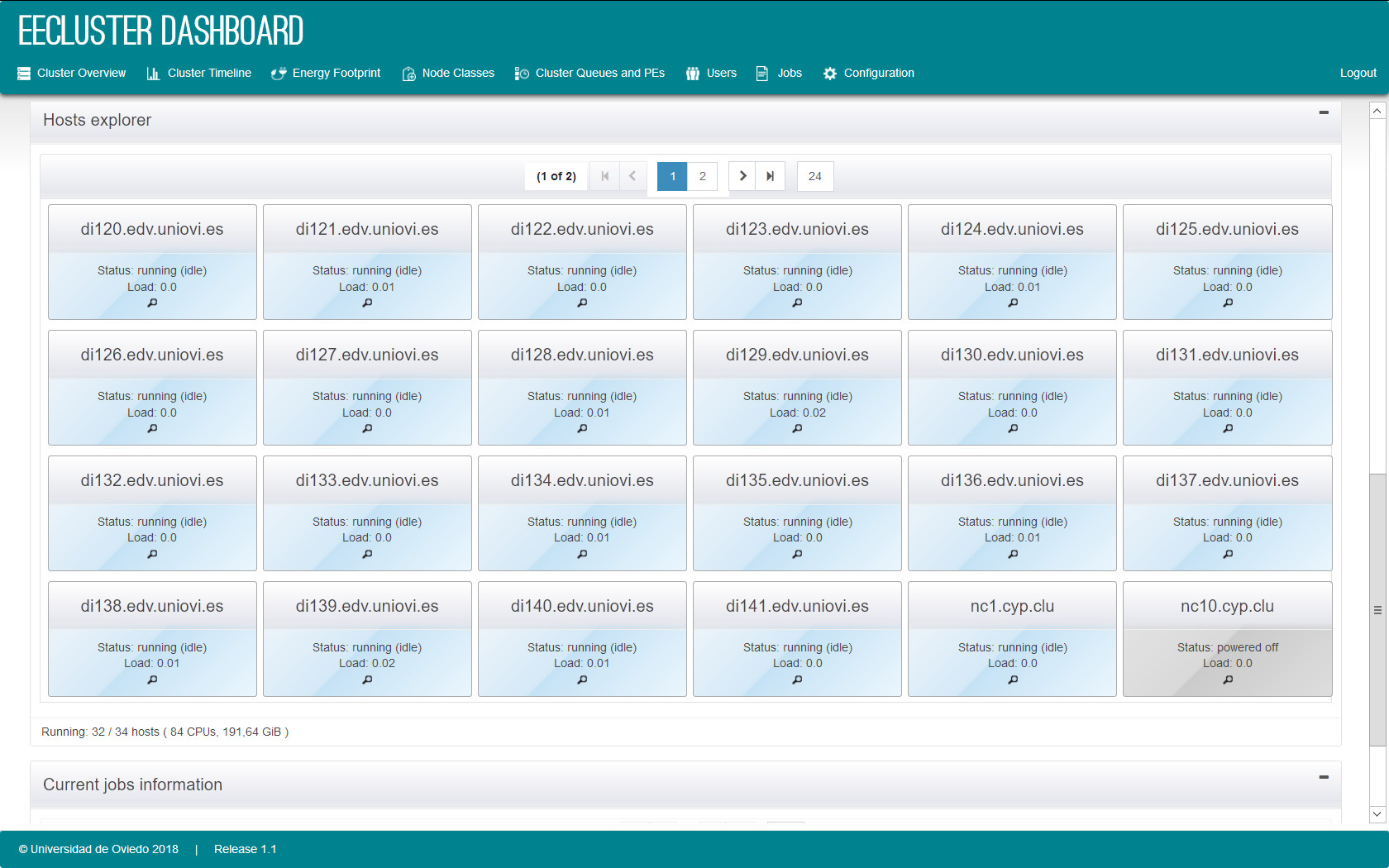

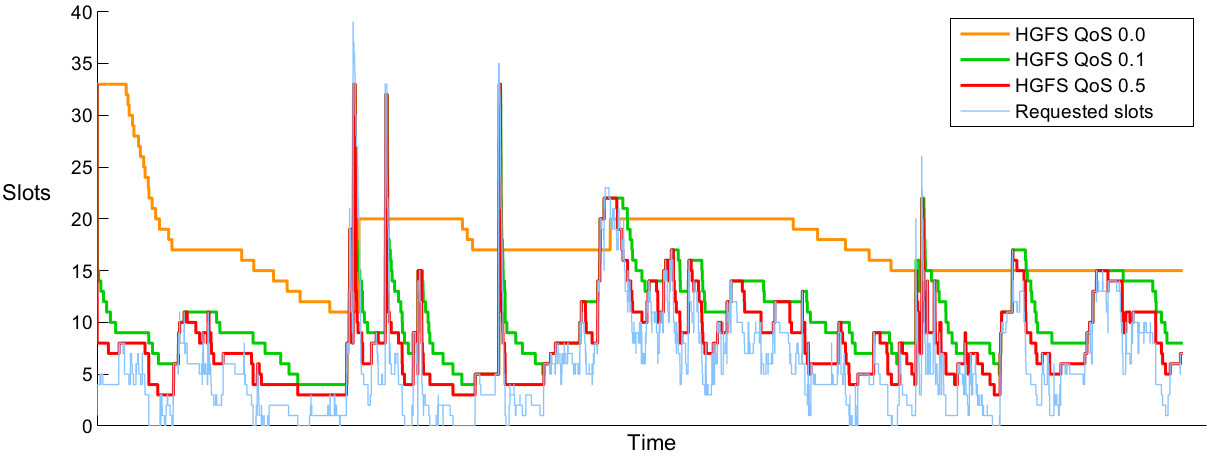

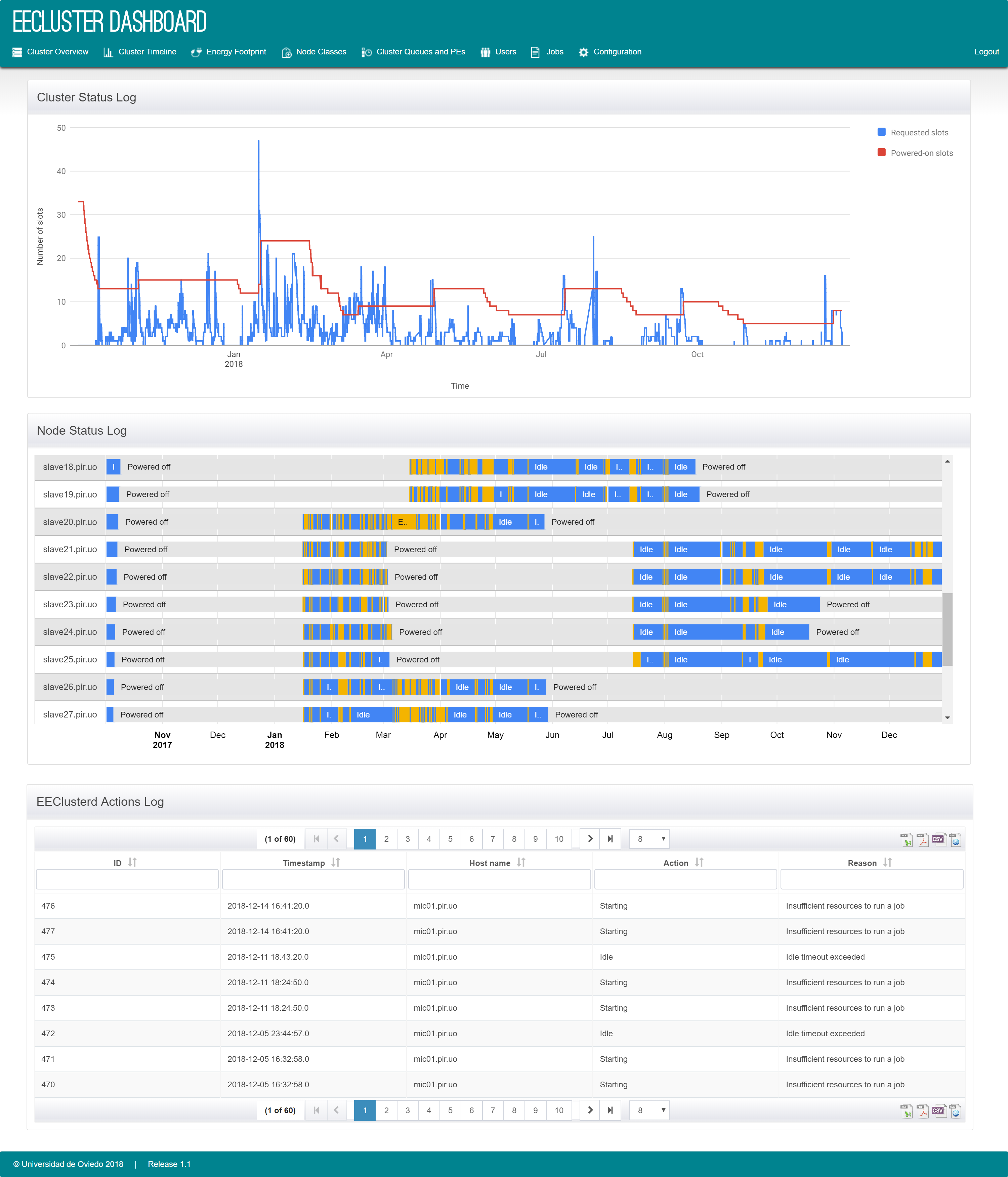

Figure 1 shows a snapshot of the EECluster dashboard, and Figure 2 illustrates EECluster's behaviour under different sets of administrator preferences, ranging from no impact on the QoS to a controlled increase in job waiting times in exchange for very large energy savings. As can be seen, the HGFS used as the decision-making mechanism in EECluster can produce very different behaviours, and so adapts to whatever set of preferences the cluster administrator may have at any given moment.

Experimental studies have also been carried out using workloads from the Scientific Modelling Cluster at Oviedo University to evaluate the results obtained by adopting this tool. In 2015, a Technology Transfer contract was signed with ASAC Comunicaciones, a company that operates one of the few clusters in Spain certified as TIER III by the Uptime Institute.

Once running, EECluster synchronizes with the cluster status. This information can be accessed through the administration dashboard from a web browser at the URL http://<hostname:port>/EECluster. The default user created during the installation process can be used to log in. EECluster users are managed from the configuration section of the dashboard.

The main page displays the status and load of each compute node, along with the queued and running jobs. This page also allows the administrator to manually power each compute node on or off and to view all of its information.

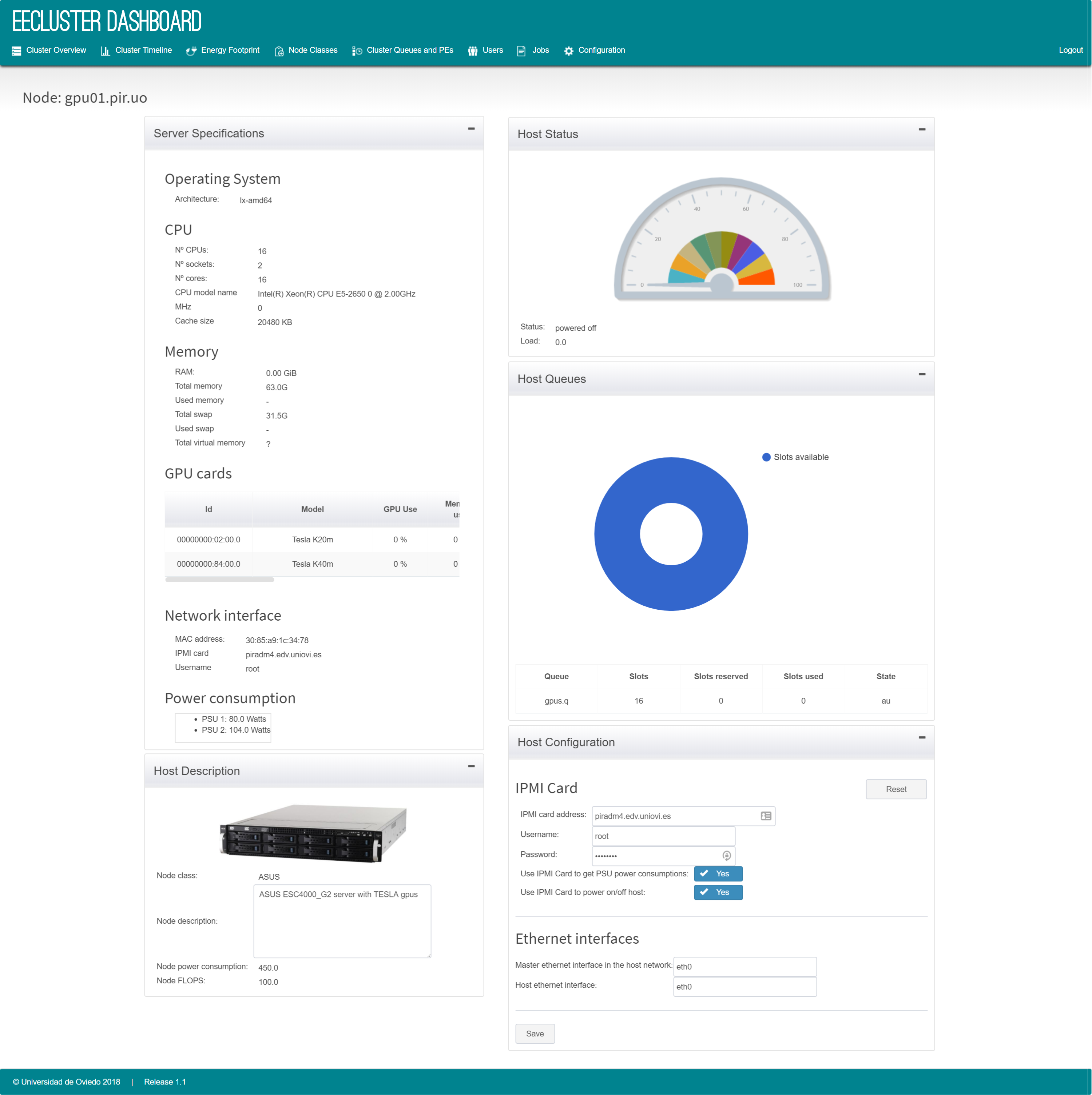

The compute node page displays the node's operating system, CPUs, memory, GPU cards, Intel MIC cards, PSU power consumption, load, and so on. It also allows the administrator to configure the node's IPMI card.

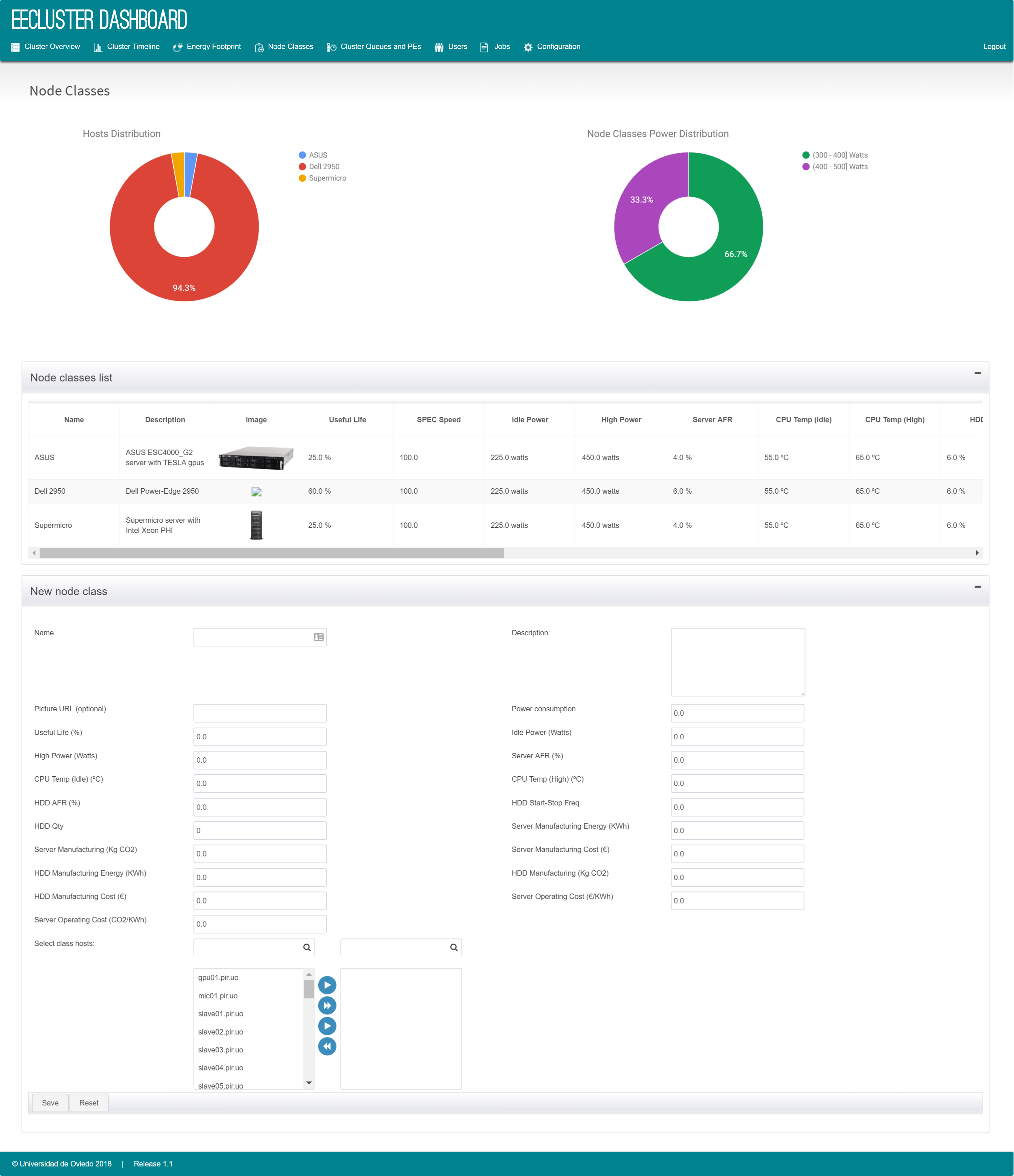

The node classes page is used to configure a number of compute node classes. Each class includes a name, a brief description, a picture, its FLOPS, its power consumption (used to calculate the node efficiency when no IPMI card is available to monitor the actual PSU consumption), and the subset of cluster nodes that belong to the class.
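As a rough illustration of how a class-level power figure can stand in for a missing IPMI reading, the sketch below computes a node efficiency value. It is not taken from the EECluster code base: the `NodeClass` type, the `node_efficiency` function, and the definition of efficiency as FLOPS per watt are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NodeClass:
    """Hypothetical stand-in for a compute node class entry."""
    name: str
    flops: float        # nominal FLOPS configured for the class
    power_watts: float  # nominal power consumption configured for the class


def node_efficiency(node_class: NodeClass,
                    measured_watts: Optional[float] = None) -> float:
    """Return an assumed efficiency metric in FLOPS per watt.

    If a measured PSU reading is supplied (for example, from an IPMI card),
    it is used; otherwise the class's configured power figure is the fallback.
    """
    if measured_watts is not None and measured_watts > 0:
        watts = measured_watts
    else:
        watts = node_class.power_watts
    if watts <= 0:
        raise ValueError("power consumption must be positive")
    return node_class.flops / watts


# Example values are illustrative only, not measurements.
example_class = NodeClass(name="example", flops=1e12, power_watts=250.0)
fallback_efficiency = node_efficiency(example_class)         # uses class figure
measured_efficiency = node_efficiency(example_class, 200.0)  # uses measured value
```

With a fallback of this kind, nodes without an IPMI card can still be compared with monitored nodes, at the cost of relying on a nominal figure instead of a live measurement.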

The users and jobs pages display information about every cluster user and every job submitted to the RMS, respectively. Both pages also show charts that summarise the information displayed in the tables.

The statistics page displays records of every decision that the HGFS has made, along with charts showing the evolution of the cluster workloads.